Navigating Digital Fidelity: Unpacking é›™ å 信託 (Twin Trust) In The Information Age

In our increasingly interconnected world, where information flows ceaselessly across borders and devices, the integrity of digital communication is paramount. Every text message, email, financial transaction, and online document relies on an unspoken pact of accuracy between sender and receiver. This fundamental reliance on the faithful transmission and interpretation of data is what we refer to as é›™ å 信託, or "Twin Trust." It's the assurance that what was sent is precisely what is received, uncorrupted and correctly understood, forming the bedrock of all reliable digital interactions.

Without é›™ å 信託, the digital landscape would descend into chaos, rife with misinterpretations, lost data, and critical errors. From the seemingly minor inconvenience of garbled text to the severe implications for sensitive YMYL (Your Money or Your Life) data like medical records or financial statements, the breakdown of this trust can have profound consequences. This article delves into the intricacies of maintaining é›™ å 信託, exploring the often-overlooked world of character encoding, the challenges it presents, and the best practices for ensuring our digital communications remain clear, accurate, and trustworthy.

Table of Contents

- The Foundation of Digital Trust: What is é›™ å 信託?

- The Unseen Language: Understanding Character Encoding

- A Historical Perspective on Characters and Their Codes

- The Mojibake Menace: When é›™ å 信託 Breaks Down

- Preserving Linguistic Nuances: Accents and Special Characters

- Achieving é›™ å 信託: Best Practices for Data Integrity

- The Future of Digital Communication and é›™ å 信託

- Who Needs to Understand é›™ å 信託?

The Foundation of Digital Trust: What is é›™ å 信託?

At its core, é›™ å 信託 (Twin Trust) in the digital realm refers to the dual pillars of reliability that underpin all text-based data exchange. The first pillar is the sender's commitment to encoding information accurately, ensuring that the original characters are correctly translated into a digital format. The second, equally crucial pillar, is the receiver's ability to interpret and decode that digital format back into the intended characters without loss or distortion. When both sides uphold their part of this "twin trust," communication flows seamlessly. When one fails, the result can be anything from minor annoyance to catastrophic data corruption.

Consider a simple email. If the sender's system uses one method to represent a character like 'é' (e with acute accent) and the recipient's system tries to read it using a different, incompatible method, that character might appear as a strange symbol, a question mark, or a series of random letters. This seemingly small issue, often dismissed as a "glitch," is a direct failure of é›™ å 信託. In contexts where accuracy is non-negotiable—such as legal contracts, medical prescriptions, or financial transactions—such failures can lead to significant financial losses, legal disputes, or even endanger lives. This makes understanding and upholding é›™ å 信託 a critical aspect of not just technical proficiency but also ethical responsibility in the digital age, directly impacting YMYL scenarios.
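The email scenario can be reproduced in a few lines of Python. This is a minimal sketch, assuming the sender encodes as UTF-8 and the receiver wrongly decodes as ISO-8859-1; the sample word and variable names are illustrative and do not come from the source.

```python
# Sender side: the text is turned into bytes using UTF-8.
original = "café"
sent_bytes = original.encode("utf-8")

# Receiver side, wrong assumption: the bytes are read as ISO-8859-1 (Latin-1).
misread = sent_bytes.decode("latin-1")

# Receiver side, correct assumption: the bytes are read as UTF-8.
correct = sent_bytes.decode("utf-8")

print(misread)  # cafÃ©  (the two UTF-8 bytes of 'é' shown as two Latin-1 characters)
print(correct)  # café
```

The bytes on the wire are identical in both cases; only the receiver's assumption differs.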

The Unseen Language: Understanding Character Encoding

To truly grasp é›™ å 信託, we must first understand the invisible language of character encoding. At its most basic, character encoding is a system that assigns a unique numerical code to every character (letters, numbers, symbols, and even emojis) that we use in written communication, and defines how each code is written out as bytes. When you type a letter on your keyboard, your computer doesn't store the letter 'A' directly; it stores its numerical representation. When that data is sent across the internet, it's those numbers, serialized as bytes, that are transmitted.
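To make the mapping from characters to numbers concrete, here is a small Python sketch. The helper name `describe` and the three sample characters are assumptions chosen for illustration.

```python
def describe(ch: str) -> tuple[int, bytes]:
    """Return the Unicode code point of ch and its UTF-8 byte sequence."""
    return ord(ch), ch.encode("utf-8")

for ch in ["A", "é", "信"]:
    code_point, utf8_bytes = describe(ch)
    # bytes.hex(" ") prints the bytes as space-separated hex pairs (Python 3.8+).
    print(f"{ch!r}: code point U+{code_point:04X}, UTF-8 bytes {utf8_bytes.hex(' ')}")
```

The code point is the abstract number assigned to the character; the bytes are what actually gets stored or transmitted under a particular encoding.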

Historically, various encoding systems emerged, often tied to specific languages or regions. ASCII, for instance, was an early standard for English characters. As the internet grew and global communication became the norm, the limitations of these localized systems became glaringly apparent. How do you represent Chinese characters, Arabic script, or Cyrillic letters alongside English ones? This challenge led to the development of more comprehensive systems, with Unicode emerging as the dominant universal standard. As the data states, "Unicode is a character encoding system that assigns a code to every." It's designed to encompass virtually all characters from all the world's writing systems, providing a consistent way to represent text globally. When using modern systems, "You will automatically get utf bytes in each format," referring to UTF-8, UTF-16, or UTF-32, which are different ways of encoding Unicode characters into sequences of bytes for storage and transmission. UTF-8, in particular, has become the de facto standard for web content due to its efficiency and backward compatibility with ASCII.
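The difference between UTF-8, UTF-16, and UTF-32 can be seen by measuring how many bytes each needs for the same character. The following is a rough sketch; the helper `encoded_lengths` is a hypothetical name, and the little-endian variants are used only so that no byte order mark is added to the counts.

```python
def encoded_lengths(text: str) -> dict[str, int]:
    """Byte length of text in three Unicode encoding forms (without a BOM)."""
    return {enc: len(text.encode(enc)) for enc in ("utf-8", "utf-16-le", "utf-32-le")}

print(encoded_lengths("A"))   # an ASCII letter
print(encoded_lengths("é"))   # an accented Latin letter
print(encoded_lengths("信"))  # a Chinese character

# Backward compatibility: pure ASCII text has identical bytes in ASCII and UTF-8.
assert "Twin Trust".encode("ascii") == "Twin Trust".encode("utf-8")
```

UTF-8 uses one byte for ASCII and more bytes only where needed, which is one reason it suits web content that is mostly markup.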

A Historical Perspective on Characters and Their Codes

The journey of character representation is a fascinating one, highlighting the complexities that contribute to the challenges of é›™ å 信託. Consider the character 'Æ' (lowercase 'æ'), a perfect example of how linguistic and historical factors influence encoding. The provided data notes: "Æ (lowercase: æ) is a character formed from the letters a and e, originally a ligature representing the Latin diphthong ae." This ligature, born from the practicalities of typesetting, evolved beyond a mere stylistic choice. "It has been promoted to the status of a letter in some languages, including Danish, Norwegian, Icelandic, and Faroese." This elevation to a distinct letter means it's not just 'a' followed by 'e'; it's a single, unique character that requires its own specific code in an encoding system.

The data further illustrates its historical presence: "It was also used in both Old Swedish, before being replaced by ä, and Old English, where it was." This historical usage, and its subsequent replacement or continued use in specific languages, underscores the dynamic nature of written language and the constant need for encoding systems to adapt. The linguistic nuances, here in Norwegian, are also highlighted: "Before other consonants, æ occurs but rarely, mostly when there is a related word with å, e.g. væpne, væske (from våpen, våt). In such words there is usually no phonetic distinction from e, thus [ˈveːpnə], [ˈvɛskə] (the latter merging with veske)." This shows how pronunciation and etymology can influence character usage, even if the phonetic distinction is lost in modern speech. For é›™ å 信託 to hold true, systems must not only recognize 'æ' as a distinct character but also correctly render it, regardless of its linguistic context or the specific font being used.

The Mojibake Menace: When é›™ å 信託 Breaks Down

The most visible manifestation of a broken é›™ å 信託 is "mojibake"—the garbled, unreadable text that appears when characters are not displayed correctly. It's a digital linguistic jumble, often resembling random symbols or sequences of Latin letters with odd accents. This happens when text encoded in one character set is interpreted using another, incompatible character set. The raw bytes are there, but the "trust" in their interpretation has failed, leading to a breakdown in communication.

Decoding the Chaos: Common Encoding Mismatches

The provided data offers numerous real-world examples of mojibake, illustrating precisely where é›™ å 信託 can falter. One common scenario is attempting to read text without knowing its original encoding: "I've tried googling around but wasn't able to find what charset that this text below belongs to..." This highlights a frequent problem for users and developers alike—receiving data without explicit encoding information, making correct interpretation a guessing game.
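When the encoding is unknown, one cautious approach is to try a short list of candidates in strict mode and inspect whatever decodes without error. The sketch below is only an illustration: the function name `candidate_decodings` and the candidate list are assumptions, and a successful decode does not prove the guess is right, since ISO-8859-1 accepts any byte sequence.

```python
def candidate_decodings(
    data: bytes, encodings: tuple[str, ...] = ("utf-8", "gbk", "iso-8859-1")
) -> dict[str, str]:
    """Decode data with each candidate encoding, keeping only those that succeed."""
    results: dict[str, str] = {}
    for enc in encodings:
        try:
            results[enc] = data.decode(enc)  # strict mode: invalid bytes raise
        except UnicodeDecodeError:
            pass  # this candidate is ruled out
    return results

# Sample input: the greeting "你好" encoded as GBK, with the label lost.
mystery = "你好".encode("gbk")
for enc, text in candidate_decodings(mystery).items():
    print(f"{enc}: {text!r}")
```

For this sample, UTF-8 is ruled out because the bytes are not valid UTF-8, while GBK and ISO-8859-1 both decode; a human (or a language heuristic) still has to decide which result is real text.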

More specific examples from the data demonstrate typical conversion failures:

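- "由月è| 好好å-|ä1 å¤©å¤©å ‘ä¸Š: mostly assorted symbols: UTF-8-encoded Chinese read as ISO8859-1: pinyin code: óéÔÂòaoÃoÃѧϰììììÏòéÏ: mostly letters topped with tone-like marks: GBK-encoded Chinese read as ISO8859-1: question-mark code: 由月要好好学习." (Translated from Chinese; the sample strings are left exactly as they appear in the data, and the last sample is cut off.) This vividly shows what happens when UTF-8 encoded Chinese characters (typically three bytes each) are mistakenly read as ISO-8859-1 (a single-byte encoding): every byte is displayed as its own character, producing a stream of symbols and accented Latin letters in which the original meaning is unreadable. The same applies to GBK Chinese (two bytes per character) read as ISO-8859-1.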

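- "Given a garbled string such as “ËÎТÄÐ, is there a way to restore it to the Chinese characters corresponding to its GB2312 encoding?" (Translated from Chinese.) This is a direct plea for a solution to revert garbled text back to its original GB2312 encoding, a common issue for those dealing with legacy Chinese systems.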

- "南拥夏栀@北梦木兮: Hello! In a Java web application, when a servlet forwards to a JSP, the following garbled Chinese appears: å° æ ¬ç ç½ ä¸ ä¹¦å ç ¨æ ·ï¼ ã ã ä¸ºäº è®©å¤§å®¶æ æ ´å¥½ç è´ç ©ä½ éª ï¼ 3æ 25æ ¥èµ·ï¼ å½ æ ¥è¾¾ä¸ å ¡å ³å° é» å± å ç å çº§ï¼ What kind of problem could this be?" (Translated from Chinese; the garbled run is left as it appears in the data.) This illustrates a common development problem in Java web applications: the character encoding is not declared consistently across the servlet, the JSP it forwards to, and the HTTP response sent to the browser, leading to garbled Chinese characters. These examples underscore that mojibake is not just an aesthetic issue; it's a fundamental failure in the digital chain of é›™ å 信託.
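The mismatches in the examples above can be simulated, and in favorable cases reversed, with a pair of small helpers. This is a sketch under stated assumptions: `garble` and `repair` are hypothetical names, the sample text is not from the source, and the round trip only works when the wrong decoder kept every byte (ISO-8859-1 does). Text that has since lost bytes or had characters replaced, as some of the quoted samples appear to have, cannot be fully restored this way.

```python
def garble(text: str, real: str, assumed: str = "latin-1") -> str:
    """Simulate mojibake: encode with the real charset, decode with the wrong one."""
    return text.encode(real).decode(assumed)

def repair(garbled: str, real: str, assumed: str = "latin-1") -> str:
    """Reverse the mistake: re-encode with the wrong charset, decode with the real one."""
    return garbled.encode(assumed).decode(real)

sample = "你好"
for charset in ("utf-8", "gbk"):
    broken = garble(sample, charset)
    print(f"{charset} read as latin-1: {broken!r} -> repaired: {repair(broken, charset)}")
```

The repair step needs both the real encoding and the wrongly assumed one; guessing either incorrectly usually raises an error or produces a different kind of garbage.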

The Impact on Critical Information

The failure of é›™ å 信託, particularly in the form of mojibake, can have severe ramifications, especially in YMYL (Your Money or Your Life) contexts. Imagine a financial statement where account numbers or currency symbols are rendered incorrectly. A simple encoding error could lead to incorrect transactions, misdirected funds, or a complete inability to reconcile accounts. In healthcare, patient names, diagnoses, or medication dosages could become unreadable, potentially leading to medical errors with life-threatening consequences. Legal documents, which rely on precise language and accurate representation of names and terms, could be rendered invalid or lead to misinterpretations in court.

Beyond these direct impacts, the erosion of trust in digital systems can have broader societal implications. If users constantly encounter garbled text, their confidence in online services, digital government platforms, or even news sources can diminish. This loss of faith can hinder digital adoption, impede economic growth, and create barriers to information access, undermining the very premise of a globally connected digital society. Ensuring é›™ å 信託 is not merely a technical detail; it's a societal imperative for maintaining the reliability and trustworthiness of our digital world.

Preserving Linguistic Nuances: Accents and Special Characters

The richness of human language extends far beyond the basic Latin alphabet. Many languages, from French to German, Spanish to Vietnamese, rely heavily on diacritics (accents, umlauts, cedillas) and special characters to convey precise meaning and pronunciation. For é›™ å 信託 to be truly global, encoding systems must flawlessly handle these linguistic nuances.

The provided data lists several such characters: "ä latin small letter a with diaeresis, å latin small letter a with ring above, æ latin small letter ae, ç latin small letter c with cedilla, è latin small letter e with grave, é latin small letter e with acute, Latin small letter e with." These are not mere decorative additions; they are integral parts of words. For example, in French, 'cote' (rating), 'côte' (coast), and 'côté' (side) are three different words distinguished only by their accents. The data explicitly states: "French letters with accents (à, â, é, è, ù…), French letters with accents, such as à, é, è, ù, are an essential part of the French language and can greatly affect the pronunciation of a word. There are three accents in French. The acute accent, which appears on the letter e (é)." If these accents are lost or corrupted due to encoding issues, the meaning of the word can be altered, pronunciation can be incorrect, and the integrity of the text is compromised. This highlights the importance of comprehensive encoding standards like Unicode, which provide unique code points for each of these characters, ensuring they can be faithfully represented across different systems and applications, thereby upholding é›™ å 信託.
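A short Python sketch can show both points: each accented letter has its own code point and official name, and forcing text into an encoding that lacks those letters destroys the distinction between words. The helper `ascii_fallback` is a hypothetical name used only for this illustration.

```python
import unicodedata

# Each accented letter is a distinct character with its own code point and name.
for ch in "äåæçèé":
    print(f"U+{ord(ch):04X}  {ch}  {unicodedata.name(ch)}")

def ascii_fallback(word: str) -> str:
    """Encode to ASCII, substituting '?' for anything ASCII cannot represent."""
    return word.encode("ascii", errors="replace").decode("ascii")

# 'côte' and 'côté' are different French words, but after a lossy ASCII
# conversion they become the same unreadable string.
for word in ("cote", "côte", "côté"):
    print(f"{word} -> {ascii_fallback(word)}")
```

Unlike the mojibake cases, this kind of loss is not reversible: once the accents are replaced, nothing in the output says which letters were there.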

Achieving é›™ å 信託: Best Practices for Data Integrity

Ensuring é›™ å 信託 is a shared responsibility, requiring diligence from developers, content creators, and even everyday users. It involves adopting consistent standards, employing careful practices, and utilizing the right tools to verify data integrity.

Developer and User Strategies

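For developers, the golden rule is consistency: use UTF-8 everywhere unless a legacy system forces otherwise. It is the most widely supported encoding and can represent every Unicode character. Explicitly declaring the encoding in web pages (e.g., using `<meta charset="utf-8">` in HTML) and in database configurations is crucial. When dealing with data conversion, knowing the true source encoding and the target encoding is vital. The provided data demonstrates the pitfalls: "Encoding: utf-8 ==> iso-8859-1 = 对å è¿ å°±æ ¯ä¸ ä¸ªæµ è¯ å é æ¯ Encoding: GB2312 ==> GBK = 对啊这就是一个测试啊逗比 Encoding: GB2312 ==> UTF-8 = һ ". The test string is a Chinese sentence meaning roughly "yeah, this is just a test." The output appears to come from reinterpreting the same bytes under a different label. Reading UTF-8 bytes as ISO-8859-1 produces mojibake, because ISO-8859-1 has no Chinese characters and each byte of a multi-byte sequence is shown as a separate character. Reading GB2312 bytes as GBK works, because GBK is a compatible superset of GB2312. Reading GB2312 bytes as if they were UTF-8 again produces mojibake. None of this means GB2312 text cannot be converted to UTF-8: a real conversion, which decodes the bytes with the true source encoding and then re-encodes the text in the target encoding, preserves every character the target can represent. It is mislabeled or unmanaged conversions between incompatible encodings that are a primary source of lost é›™ å 信託.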
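The distinction between converting text and merely relabeling bytes can be sketched in Python as follows. The function name `transcode` and the shortened test string are assumptions made for this example, not part of the quoted data.

```python
def transcode(data: bytes, source: str, target: str) -> bytes:
    """Convert bytes between encodings by decoding to text, then re-encoding."""
    return data.decode(source).encode(target)

text = "对啊这就是一个测试"
gb_bytes = text.encode("gb2312")

# A managed conversion from GB2312 to UTF-8 keeps the text intact.
utf8_bytes = transcode(gb_bytes, "gb2312", "utf-8")
assert utf8_bytes.decode("utf-8") == text

# GBK is a superset of GB2312, so GB2312 bytes decode correctly as GBK.
assert gb_bytes.decode("gbk") == text

# Relabeling instead of converting: the same GB2312 bytes read as UTF-8
# are mostly invalid and come out as replacement characters.
print(gb_bytes.decode("utf-8", errors="replace"))

# ISO-8859-1 has no Chinese characters, so a real conversion fails loudly
# rather than silently producing mojibake.
try:
    text.encode("iso-8859-1")
except UnicodeEncodeError as exc:
    print("cannot convert to ISO-8859-1:", exc.reason)
```

Going through a decoded string in the middle forces the code to state both encodings explicitly, which is where most unmanaged conversions go wrong.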

For users, while less technical, awareness is key. If you encounter garbled text, report it to the website or application developer. When creating content, especially if it includes special characters or multiple languages, ensure your software is set to save in UTF-8. For instance, when typing

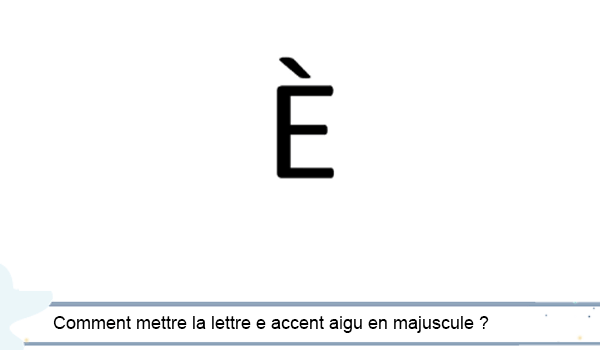

How to type an accented capital É

![Les accents é - è - ê [Astuce N°17] - YouTube](https://i.ytimg.com/vi/E5vGYyuaDTY/maxresdefault.jpg)

The accents é - è - ê [Tip No. 17] - YouTube

How to type a capital é (É) on the keyboard: a tutorial to follow